We’ve all seen the science-fiction movies in which hordes of scary robots come to life and take control of human civilization. Artificial intelligence (AI) gone wrong… right? But what is modern-day AI, really, and how can we use it to our advantage? Could it ever spiral out of control? It turns out that AI is a lot less scary than most people might think and a whole lot more practical for today’s industry.

There are many forms of AI. For example, when the algorithms behind your web browser learn your favorite sites and vendors and tailor the ads in the sidebar to products similar to ones you’ve viewed in the past, that’s AI at work.

Even something as simple as the “One-Click Chat” feature in ride-sharing company Uber’s app, which lets drivers send quick, personalized responses on the go to customers wondering how much longer they’ll be waiting, is a form of AI. Digital voice assistants such as Alexa, Siri, Portal, and Google Assistant fall into this group, too.

There are also robotic platforms that use learning algorithms to behave in a more human-like way. As we’ll see, most types of AI, whether machine learning, language processing, or big data management, share common software and hardware design methodologies, and an entire industry has grown up to support them.

A brief history of artificial intelligence

The idea of AI dates back at least to a bronze machine named Talos, a character in the Greek epic poem “Argonautica” (third century B.C.), who protects the island of Crete from invaders. Talos is not exactly an army of autonomous robots, but he is an early vision of AI. Of course, real-life AI did not take shape until the invention of computers.

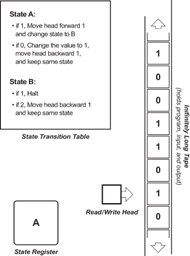

Alan Turing, the English mathematician known for his role in cracking Nazi codes during WWII, was one of the first computer scientists to formalize algorithms and computation, using an abstract model now known as the “Turing machine.” The Turing machine is not a physical computer but a mathematical model of one, and the computing principles it captures are still fundamental today. A Turing machine (shown in Figure 1) reads and writes symbols on a tape, one cell at a time, following a fixed set of rules that specify, for each state and symbol, what to write, which way to move, and which state to enter next. Despite this simplicity, it can in principle carry out any computation that a modern computer can.

Figure 1: A Turing machine showing state transitions and conditions (Image: Jonathan Bartlett, ResearchGate.net)
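The rules, states, and transitions idea is easy to see in a small simulator. The Python sketch below is illustrative and is not a reconstruction of the machine in Figure 1: it assumes a hypothetical transition table that increments a binary number. Each rule maps the current state and the symbol under the head to a symbol to write, a head movement, and the next state; `_` stands for a blank cell.

```python
from collections import defaultdict

# Hypothetical transition table for binary increment:
# (state, symbol read) -> (symbol to write, head move, next state)
RULES = {
    ("seek_end", "0"): ("0", +1, "seek_end"),  # move right past the digits
    ("seek_end", "1"): ("1", +1, "seek_end"),
    ("seek_end", "_"): ("_", -1, "carry"),     # hit the blank: step back to the last digit
    ("carry", "1"): ("0", -1, "carry"),        # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),          # 0 + carry = 1, done
    ("carry", "_"): ("1", 0, "halt"),          # ran off the left end: write a new leading 1
}


def run(tape_input: str, blank: str = "_", max_steps: int = 10_000) -> str:
    """Run the machine from the leftmost cell and return the non-blank tape contents."""
    tape = defaultdict(lambda: blank, enumerate(tape_input))  # unbounded tape
    head, state, steps = 0, "seek_end", 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt")
        write, move, state = RULES[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    used = [pos for pos, sym in tape.items() if sym != blank]
    return "".join(tape[pos] for pos in range(min(used), max(used) + 1))


print(run("1011"))  # 1100 (11 + 1 = 12)
```

The `max_steps` guard is there because, in general, there is no way to decide in advance whether an arbitrary Turing machine will ever halt.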

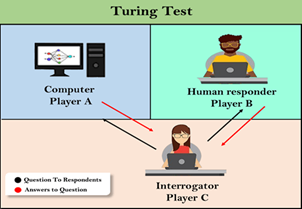

Beyond performing tasks and simple algorithms, Turing went a step further and asked, “Can machines think?” In 1950, he proposed a method, which he called the imitation game and which is now known as the Turing test, for judging whether a machine can exhibit behavior indistinguishable from a human’s. In the test, a human evaluator exchanges typed messages with both a human and a machine without knowing which is which. If the evaluator can’t reliably tell which responder is the machine, the machine has shown human-like behavior that could be considered “thinking.” The test (shown in Figure 2) has drawn many objections and criticisms, but it was undoubtedly a fundamental building block for machine-based artificial intelligence.

Figure 2: Visual depiction of a Turing test (Image: Javatpoint.com)

Over the next 50 years, AI advanced as computers became faster, stored more information, and solved harder problems. Moore’s Law, an observation by Intel co-founder Gordon Moore, holds that the number of transistors on a chip doubles roughly every two years as transistors keep shrinking, which has driven steady gains in computer memory and speed. Whether the trend still holds today is debated, but with increased computational power and highly complex algorithms, the industry has integrated AI into some very interesting applications.
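A short calculation shows why the doubling period matters. The sketch below uses a hypothetical helper that projects a count N after t years as N = N0 · 2^(t/T) for a doubling period T; the starting count of 1,000 transistors is made up for illustration.

```python
def projected_count(initial: float, years: float, doubling_period_years: float = 2.0) -> float:
    """Project a quantity that doubles every `doubling_period_years` years."""
    return initial * 2 ** (years / doubling_period_years)


# Hypothetical starting chip with 1,000 transistors, projected 10 years out.
start = 1_000
every_two_years = projected_count(start, 10)      # 2**5  = 32x growth
every_year = projected_count(start, 10, 1.0)      # 2**10 = 1024x growth
print(f"{every_two_years:,.0f} vs {every_year:,.0f}")  # 32,000 vs 1,024,000
```

Over a single decade, assuming annual rather than two-year doubling overstates growth by a factor of 32, so the period is worth stating whenever the law is quoted.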

The subsets of AI and their uses

There are many subsets of AI, but some of the most common are machine learning, natural-language processing, expert systems, robotics, machine vision, and speech recognition. Each of these has already been applied in industry, so let’s explore a few.

One application of AI in the marketplace is machine vision. If a machine uses a camera to process images and look for specific things, can it get better at recognizing its target over time? With advanced software techniques that incorporate both image recognition and learning, this has proven to be highly effective and practical. Camera systems using AI-based image recognition are available for a variety of uses including security, medical science, and the food industry.
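A system that gets better at recognizing a target as it sees more labeled examples can be sketched with a perceptron, one of the simplest learning algorithms. This is a toy illustration under stated assumptions: each image is reduced to two hypothetical, pre-extracted features (normalized brightness and edge density), and the labels are invented. Production vision systems typically use deep convolutional networks on raw pixels instead.

```python
Vector = list[float]


def train_perceptron(samples: list[Vector], labels: list[int], epochs: int = 20, lr: float = 1.0):
    """Learn weights and a bias from examples labeled +1 (target) or -1 (not target)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            if y * activation <= 0:  # misclassified: nudge the boundary toward this example
                weights = [w + lr * y * xi for w, xi in zip(weights, x)]
                bias += lr * y
    return weights, bias


def predict(weights: Vector, bias: float, x: Vector) -> int:
    """Classify one feature vector as +1 (target) or -1 (not target)."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else -1


# Hypothetical features per image: (brightness, edge_density); +1 = target present.
samples = [[0.9, 0.8], [0.8, 0.9], [0.7, 0.95], [0.1, 0.2], [0.2, 0.1], [0.3, 0.15]]
labels = [1, 1, 1, -1, -1, -1]

weights, bias = train_perceptron(samples, labels)
print(predict(weights, bias, [0.85, 0.85]))  # new, unseen image -> 1
```

The model only changes when it makes a mistake, which is the simplest form of "getting better over time": every misclassified example shifts the decision boundary so that similar images are handled correctly afterward.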

Machine learning, including its subset deep learning, is another widely used branch of AI. IBM’s Watson Marketing (now branded as Acoustic) uses AI-powered analytics built on machine learning to help tailor and personalize advertising messages on platforms such as Facebook. And then there are digital assistants such as Siri and Alexa, which use natural-language processing to parse spoken requests and respond with intelligible, human-like answers.
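Personalization of this kind, like the browser ad tailoring described earlier, often starts by comparing what a user has viewed with what is available. The sketch below is not how Watson Marketing or Acoustic works. It is a minimal content-based approach that assumes each product or ad carries hypothetical topic tags, builds a user profile by counting tags from viewing history, and ranks ads by cosine similarity to that profile. Machine-learning systems replace the hand-assigned tags with features and weights fitted from data.

```python
import math
from collections import Counter


def build_profile(viewed_tags: list[list[str]]) -> Counter:
    """Count how often each tag appears in the products a user has viewed."""
    return Counter(tag for tags in viewed_tags for tag in tags)


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse tag-weight vectors."""
    dot = sum(weight * b.get(tag, 0.0) for tag, weight in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_ads(profile: dict[str, float], ads: dict[str, dict[str, float]]) -> list[str]:
    """Return ad names ordered from most to least similar to the profile."""
    return sorted(ads, key=lambda name: cosine(profile, ads[name]), reverse=True)


# Hypothetical viewing history and ad catalog.
history = [["running", "outdoors"], ["running"], ["running"]]
ads = {
    "office_chair": {"furniture": 1.0},
    "rain_jacket": {"outdoors": 1.0},
    "trail_shoes": {"running": 1.0, "outdoors": 1.0},
}

print(rank_ads(build_profile(history), ads))
# ['trail_shoes', 'rain_jacket', 'office_chair']
```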

An interesting branch of AI is the expert system, which takes many different forms but has one common task: to make knowledge-based decisions that would normally be made by humans. An expert system is typically a computer program that models a human’s expertise in a specific area; by applying a set of rules to the facts stored in its “knowledge base,” it uses logic to reach its own reasonable conclusions. Examples include Google search suggestions, medical diagnosis, and chemical analysis.
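The reasoning loop at the heart of many rule-based expert systems, often called forward chaining, can be sketched in a few lines: keep applying any rule whose conditions are all satisfied by the known facts until no new conclusions appear. The rules below are hypothetical toy examples, not a real medical knowledge base, and full expert-system shells add features such as certainty factors and explanations of how a conclusion was reached.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    conditions: frozenset[str]  # facts that must all be known
    conclusion: str             # fact added when the rule fires


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Repeatedly fire rules until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known


# Hypothetical toy knowledge base.
RULES = [
    Rule(frozenset({"fever", "cough"}), "possible_respiratory_infection"),
    Rule(frozenset({"possible_respiratory_infection", "short_of_breath"}), "recommend_doctor_visit"),
]

conclusions = forward_chain({"fever", "cough", "short_of_breath"}, RULES)
print("recommend_doctor_visit" in conclusions)  # True
```

The outer loop repeats until a full pass adds nothing, so the result does not depend on the order in which rules are listed: a conclusion that enables another rule is picked up on the next pass.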

AI hardware and its future

So far, we’ve looked at AI subsets that are implemented in software, but what about the hardware that powers them? In recent years, hardware developers have designed and produced computer systems that accelerate AI algorithms and applications. Some chipsets are even built specifically for AI, as high-throughput systems that use parallel processing, dataflow management, and intensive graphics processing (for applications such as machine vision).
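Why parallel hardware helps becomes clearer from the arithmetic involved. Much of a neural network’s work is matrix multiplication, as in a fully connected (dense) layer, where every output value is an independent dot product. The plain-Python sketch below runs sequentially and only illustrates that structure and counts the multiply-accumulate (MAC) operations; the layer sizes are hypothetical. An AI accelerator gains its speed by computing many of those independent dot products at once.

```python
Matrix = list[list[float]]


def dense_layer(x: Matrix, w: Matrix) -> Matrix:
    """Multiply a batch of inputs (batch x n_in) by weights (n_in x n_out).

    Each output element out[i][j] depends only on row i of x and column j of w,
    so all of them could be computed in parallel on suitable hardware.
    """
    n_in, n_out = len(w), len(w[0])
    return [[sum(row[k] * w[k][j] for k in range(n_in)) for j in range(n_out)] for row in x]


def mac_count(batch: int, n_in: int, n_out: int) -> int:
    """Multiply-accumulate operations needed for one dense-layer pass."""
    return batch * n_in * n_out


print(dense_layer([[1.0, 2.0], [3.0, 4.0]], [[1.0, 0.0], [0.0, 1.0]]))  # identity weights
# A hypothetical 1,024-in, 1,024-out layer on a batch of 64 inputs:
print(f"{mac_count(64, 1024, 1024):,} MACs")  # 67,108,864 MACs
```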

While some application-specific integrated circuits (ASICs) have been developed specifically for AI, more general-purpose off-the-shelf chips such as field-programmable gate arrays (FPGAs) are flexible: their internal logic can be rewired and reprogrammed after manufacturing to accelerate AI-based functions and algorithms. Intel, for example, acquired FPGA giant Altera in 2015 and has since used its FPGAs to expand its AI portfolio.

Another recent Intel acquisition was Habana Labs, which developed an AI processor (shown in Figure 3) with integrated remote direct memory access (RDMA) over Ethernet. RDMA lets one machine read from or write to another machine’s memory directly, without going through the remote processor’s operating system, which speeds up computation over a network and allows systems to scale at low cost. Studies have shown that these lower-latency AI processors outperform traditional servers by up to a factor of 2 for question answering and by almost a factor of 4 for image processing. How do these AI processors run so much faster? Their designers plan for heavy memory usage and optimize computation methods for AI-specific tasks.

Figure 3: Habana’s Gaudi AI processing board (Image: Habana.ai)

But Intel isn’t the only company developing hardware and software to support AI. Several startups are working on AI solutions to address these needs, such as U.K.-based chipmaker Graphcore, which has raised almost half a billion dollars in funding. Wave Computing, a company based in California, is developing an AI processor tailored to deep-learning algorithms. Another California-based company, SambaNova, specializes in big data analytics and management running on its own AI processor. In SambaNova’s own words, “a fraction of our chip [represented in the white box in Figure 4] is faster than your entire GPU.”

Figure 4: SambaNova’s AI processor (Image: SambaNova.ai)

In addition to these startups, many small companies with big ideas have received considerable funding to develop software and hardware specifically for AI.

AI is less terrifying than it is exciting, and many big things are expected to come from the sector in the years ahead.